The 4 layers of a Dialogflow bot

When you are building chatbots using Dialogflow, it helps to think of the bot as having 4 layers.

These are the layers:

- UI Layer

- Middleware/Integration Layer

- Conversation Layer

- Webhook/Fulfillment Layer

Let us look at these in more detail.

Rich Website Chatbot

If you haven’t seen it already, I have a website chatbot on my site which has some features you don’t see in the integrated 1-click web demo.

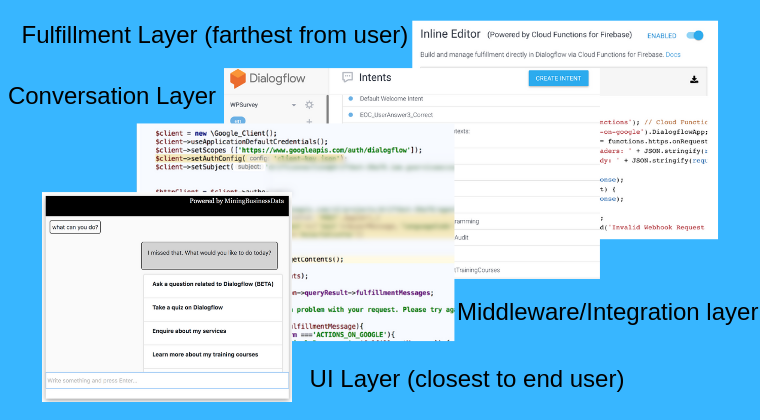

The rich website chatbot is a very good example, because I could create this graphic for it, which shows all 4 layers.

The 4 Layers

UI Layer

The UI layer is the one closest to the end user, as you can see in the graphic. It is usually visual, and it is the layer the user actually interacts with.

Middleware/Integration Layer

The middleware or integration layer connects the UI layer to your Dialogflow agent. Usually, this layer has code which calls the detectIntent API method, which is required for the integration. (It could also have other code in some cases.)
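To make the middleware layer more concrete, here is a minimal sketch of the request it would assemble for a detectIntent call against the Dialogflow ES v2 REST API. The function name, project ID, and session ID are hypothetical, and the sketch only builds the URL and JSON body. A real integration would also need to send the request with a valid OAuth access token, which is left out here.

```python
import json
from urllib.parse import quote

# Base URL of the Dialogflow ES (v2) REST API.
DIALOGFLOW_ES_BASE = "https://dialogflow.googleapis.com/v2"


def build_detect_intent_request(project_id, session_id, text, language_code="en"):
    """Return (url, json_body) for a detectIntent call. Nothing is sent."""
    # The session path identifies one conversation with one end user.
    session = (
        f"projects/{quote(project_id, safe='')}"
        f"/agent/sessions/{quote(session_id, safe='')}"
    )
    url = f"{DIALOGFLOW_ES_BASE}/{session}:detectIntent"
    # The user's message goes into queryInput.text.
    body = {
        "queryInput": {
            "text": {"text": text, "languageCode": language_code}
        }
    }
    return url, json.dumps(body)


if __name__ == "__main__":
    # "my-project" and "user-123" are placeholder values.
    url, body = build_detect_intent_request(
        "my-project", "user-123", "What are your opening hours?"
    )
    print(url)
    print(body)
```

The UI layer would hand the user's message to a function like this, and the middleware would pass the agent's reply back to the UI.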

Conversation Layer

This is all the stuff you define inside the Dialogflow app: the intents, entities, contexts etc.

Webhook/Fulfillment Layer

Webhooks are required in Dialogflow to implement even basic business logic, like adding two numbers. This is the fourth layer, and the farthest from the user.
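As an illustration of the "adding two numbers" case, here is a minimal sketch of the logic a Dialogflow ES webhook could run. It assumes the intent collects two parameters named number1 and number2 (hypothetical names), and it shows only the function that turns the webhook request JSON into a response. The web server that would receive the request is left out.

```python
def handle_webhook(request_json):
    """Build a Dialogflow ES webhook response that adds two numbers."""
    # Dialogflow ES sends the extracted parameters under queryResult.parameters.
    params = request_json.get("queryResult", {}).get("parameters", {})
    try:
        # number1 and number2 are assumed parameter names, not Dialogflow built-ins.
        a = float(params["number1"])
        b = float(params["number2"])
    except (KeyError, TypeError, ValueError):
        # A parameter is missing or is not a number.
        return {"fulfillmentText": "Please give me two numbers to add."}
    # fulfillmentText is the reply Dialogflow passes back to the user.
    return {"fulfillmentText": f"{a:g} + {b:g} = {a + b:g}"}


if __name__ == "__main__":
    sample = {"queryResult": {"parameters": {"number1": 3, "number2": 4}}}
    print(handle_webhook(sample)["fulfillmentText"])
```

Even logic this small has to live outside the agent, which is why it counts as a separate layer.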

Do the layers overlap?

Yes, it is sometimes hard to define where a layer ends and where the next one begins. While this is a useful concept when trying to understand what is going on in a Dialogflow chatbot, you don’t have to assess every single line of code and wonder which layer it belongs to.

Do all layers exist in all chatbots?

Not really.

For example, in theory, you could create a pure FAQ chatbot which has no business logic at all. Such a bot will not have a fulfillment layer.

You could build an email-based interface to a Dialogflow bot; that is, the bot can answer your queries via email. While you might say the UI is your email client, in theory the client doesn’t have to exist for your bot to function. (For example, you could call the API of an email provider like SendGrid with the contents of the email, and your “bot” will work just as well.)

In the case of a voice-based app for Google Home, there is no visual interface at all, although some people do refer to it as a “voice user interface”.

Why does it matter?

Often, when discussing website chatbots, it helps to understand which layers are created by you (the developer) and which layers can be hosted by other companies or services. The 4 layer approach helps to clarify this.
