Dialogflow ES Unit Testing Framework


This article provides a framework for adding unit testing to your Dialogflow ES bot.

Introduction

Recently I saw a question on Stack Overflow about a testing framework for Dialogflow ES.

Dialogflow CX does have built-in Test Cases. That is one more reason why CX is more powerful and better suited for building complex flows than ES.

However, there is no standard framework for ES.

If you want to do conversation testing, my suggestion is to just use the pytest library and do it inside the PyCharm IDE (which has a free community edition).

In this article I will talk about a bunch of things which you should consider when creating test cases for Dialogflow ES.

Verbatim vs non-verbatim test phrases

While it might make sense to use an existing training phrase as a test phrase, in practice that is not a good idea. I explain why in this video:

As you can see from the video, using the exact training phrase does not give you much additional insight, because a phrase the agent was trained on will almost always map back to its own intent. Instead, you should add a word such as “blah” to the end of the sentence.

Let us call such test phrases extended test phrases (that is, they are non-verbatim test phrases).

Generating test scripts

In Dialogflow CX, you generate test scripts in the simulator, where you can simply save a conversation as a test case.

But you don’t have this option in Dialogflow ES. So you need to generate your own test scripts.

And test coverage for pure FAQ chatbots is very different from test coverage for multi-turn chatbots.

Test coverage for pure FAQ chatbots

When we are generating test scripts, we should consider test “coverage”.

Pure FAQ chatbots are those which do not have any contexts (or slot filling) and don’t answer follow up questions.

For these, you want every intent to be represented by a single test phrase (during a given test run).

The simplest thing to do here is to take a random training phrase per intent, add the word “blah” to the end of it, and use the REST API to test it.

Note: be careful if you have one-word training phrases. Often these are not worth testing in any case. But if the training phrase really is a single word, just use that word on its own as the test phrase.
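Here is a minimal sketch of that idea in Python, using only the standard library. It picks one random training phrase per intent, appends “blah” (leaving single-word phrases unchanged), and builds a call to the Dialogflow ES v2 `detectIntent` REST endpoint. The function names, the `phrases_by_intent` dictionary, and the way you obtain the OAuth access token are all assumptions made for illustration, not part of any official framework.

```python
import json
import random
import urllib.request


def make_test_phrase(training_phrase, filler="blah"):
    """Append a filler word; single-word phrases are returned unchanged."""
    phrase = training_phrase.strip()
    if len(phrase.split()) <= 1:
        return phrase
    return f"{phrase} {filler}"


def pick_test_phrases(phrases_by_intent, rng=random):
    """Choose one random training phrase per intent and extend it."""
    return {
        intent: make_test_phrase(rng.choice(phrases))
        for intent, phrases in phrases_by_intent.items()
        if phrases  # intents without training phrases are skipped
    }


def build_detect_intent_request(project_id, session_id, text, token,
                                language_code="en"):
    """Build (but do not send) a v2 detectIntent REST request."""
    url = (
        "https://dialogflow.googleapis.com/v2/"
        f"projects/{project_id}/agent/sessions/{session_id}:detectIntent"
    )
    body = {"queryInput": {"text": {"text": text, "languageCode": language_code}}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # token source is up to you
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


def matched_intent(response_json):
    """Return the display name of the matched intent, or None."""
    return response_json.get("queryResult", {}).get("intent", {}).get("displayName")


def detect_intent(project_id, session_id, text, token):
    """Send the phrase to the agent and return the matched intent name."""
    request = build_detect_intent_request(project_id, session_id, text, token)
    with urllib.request.urlopen(request) as response:
        return matched_intent(json.load(response))
```

A test passes when `detect_intent(...)` returns the intent the training phrase was taken from. For a pure FAQ bot you can use a fresh session ID for every phrase, since there are no contexts to carry over.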

Test coverage for multi-turn chatbots

If a chatbot has multiple conversation turns, you are testing for two things – both the flow sequence and the intent mapping accuracy.

You also need to make sure every intent is covered in the test script, and then select a test phrase for each intent from its training phrases.
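One way to picture a multi-turn test is as an ordered list of (test phrase, expected intent) pairs that are all sent on the same session, so that contexts set by one turn are still active for the next. The sketch below is only an illustration under that assumption. `run_conversation`, `uncovered_intents`, and the `detect` callable are hypothetical names, and `detect` stands in for whatever function you use to call your agent.

```python
import uuid


def run_conversation(turns, detect, session_id=None):
    """Send each turn in order on one session and collect mismatches.

    turns:  list of (test_phrase, expected_intent) pairs.
    detect: callable (session_id, text) -> matched intent display name.
    Returns a list of (step, phrase, expected, actual) for failed turns.
    """
    session_id = session_id or uuid.uuid4().hex
    failures = []
    for step, (phrase, expected) in enumerate(turns, start=1):
        actual = detect(session_id, phrase)
        if actual != expected:
            failures.append((step, phrase, expected, actual))
    return failures


def uncovered_intents(all_intents, conversations):
    """List intents that no conversation in the test script expects."""
    covered = {expected for turns in conversations for _, expected in turns}
    return sorted(set(all_intents) - covered)
```

An empty result from `run_conversation` means both the flow sequence and the intent mapping held up for that conversation. An empty result from `uncovered_intents` means every intent appears somewhere in the test script.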

The simplest way to do this for both pure FAQ chatbots and for multi-turn bots is to convert your Dialogflow ES agent ZIP file into a flat CSV file and then use the CSV to generate the test script.
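If you want to try the flattening step yourself, here is a rough sketch. It assumes the usual layout of an ES export, where each intent lives in `intents/<name>.json` and its training phrases live in `intents/<name>_usersays_<language>.json` as a list of entries whose `data` parts are joined to form the phrase. The column names are my own choice for this example and are not necessarily the format any particular tool produces.

```python
import csv
import io
import json
import zipfile

FIELDS = ["intent", "language", "input_contexts", "training_phrase"]
USERSAYS = "_usersays_"


def flatten_agent_zip(zip_source):
    """Return one row per (intent, training phrase) from an ES export ZIP."""
    rows = []
    with zipfile.ZipFile(zip_source) as archive:
        names = sorted(archive.namelist())
        for name in names:
            is_intent_file = name.startswith("intents/") and name.endswith(".json")
            if not is_intent_file or USERSAYS in name:
                continue  # phrase files are read together with their intent
            intent = json.loads(archive.read(name))
            prefix = name[: -len(".json")] + USERSAYS
            for phrase_file in (n for n in names if n.startswith(prefix)):
                language = phrase_file[len(prefix): -len(".json")]
                for entry in json.loads(archive.read(phrase_file)):
                    # A phrase is stored as parts (plain text and annotated
                    # entity values); joining them gives the full sentence.
                    text = "".join(p.get("text", "") for p in entry.get("data", []))
                    rows.append({
                        "intent": intent.get("name", name),
                        "language": language,
                        "input_contexts": ";".join(intent.get("contexts", [])),
                        "training_phrase": text,
                    })
    return rows


def rows_to_csv(rows):
    """Serialize the flattened rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Calling `flatten_agent_zip("my-agent.zip")` (a hypothetical file name) gives you the rows, and `rows_to_csv(rows)` gives you text you can write to a file. Keeping the input contexts in the CSV is useful for multi-turn bots, because it tells you which intents can only be reached after an earlier turn.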

How to automatically generate the test script

I have built a tool which allows you to automatically generate the test script for your ES bot.

Go to the tool page and click on the “How to Use this Tool” button, or click here to read the tutorial.

Running Test Scripts

Once you have flattened your ES agent ZIP file into a CSV file, you can use it with PyCharm and run your test scripts.

In my Improving Dialogflow ES accuracy course, I explain how you can set up Automated Conversation Testing using Python.

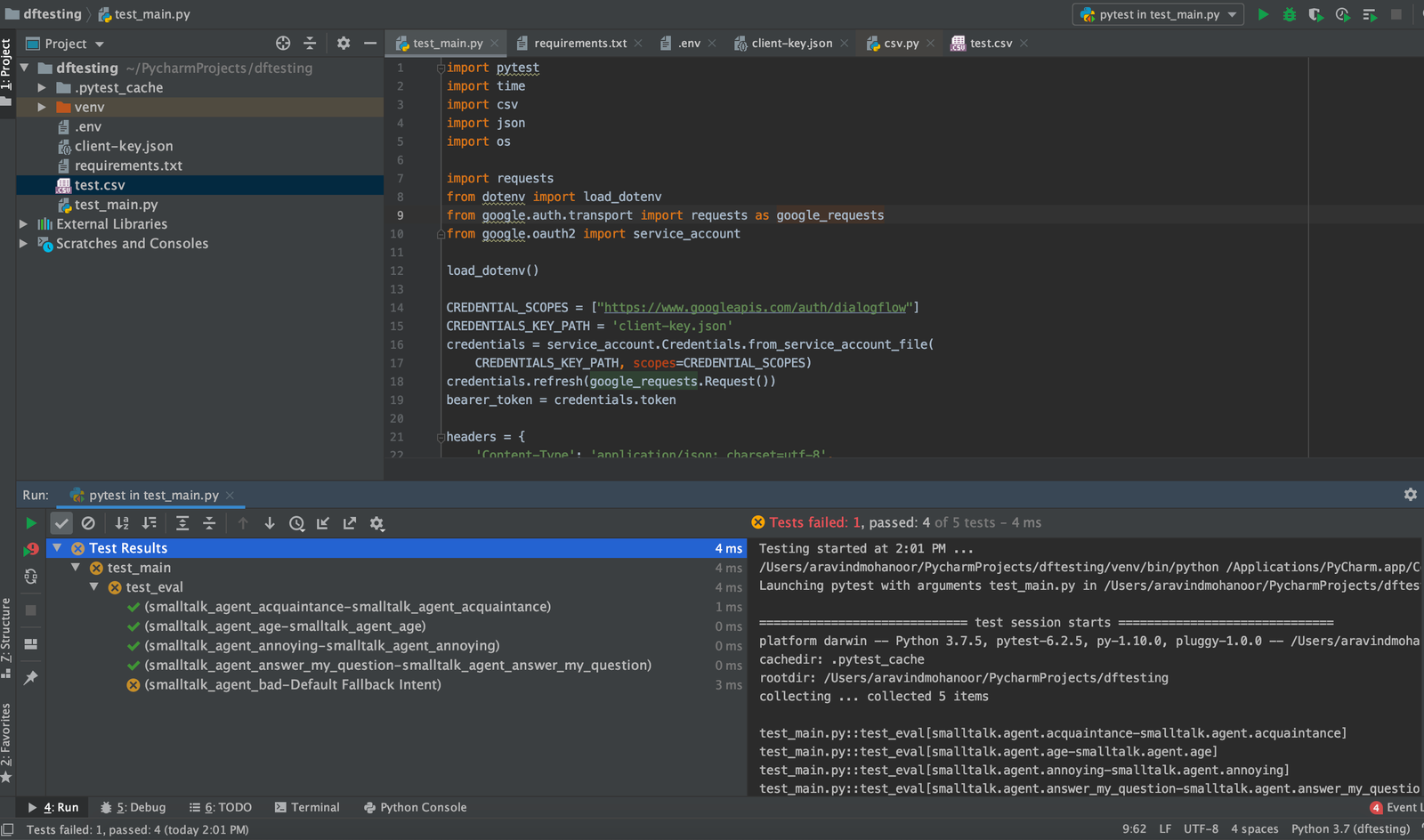

Here is a screenshot of the desired result. Using pytest and PyCharm, you can very easily set up automated conversation testing for your Dialogflow ES agent. As you can see in the screenshot, this allows you to quickly check if your existing intents are still working as expected.
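To give a rough idea of what such a setup can look like, here is a small sketch of a pytest module that turns each row of a CSV test script into its own test. It is not the code from the course. The file name, the `test_phrase` and `expected_intent` column names, and the `detect_intent_name` placeholder are all assumptions you would replace with your own. It uses pytest's `pytest_generate_tests` hook, so each row should show up as a separate pass or fail in the test runner.

```python
import csv
import os

# Hypothetical file name and column names; adjust them to your test script.
CSV_PATH = os.environ.get("DIALOGFLOW_TEST_CSV", "test_script.csv")


def load_cases(path):
    """Read (test_phrase, expected_intent) pairs from the CSV test script."""
    with open(path, newline="", encoding="utf-8") as handle:
        return [
            (row["test_phrase"], row["expected_intent"])
            for row in csv.DictReader(handle)
        ]


def detect_intent_name(phrase):
    """Placeholder: send the phrase to your agent, return the intent name."""
    raise NotImplementedError("wire this up to your Dialogflow ES agent")


def pytest_generate_tests(metafunc):
    """pytest hook: create one test per row of the CSV file."""
    if {"phrase", "expected_intent"} <= set(metafunc.fixturenames):
        cases = load_cases(CSV_PATH) if os.path.exists(CSV_PATH) else []
        metafunc.parametrize("phrase,expected_intent", cases)


def test_intent_mapping(phrase, expected_intent):
    # The test passes when the agent maps the phrase to the expected intent.
    assert detect_intent_name(phrase) == expected_intent
```

Save this as a file whose name starts with `test_`, put the CSV next to it, and run `pytest` from the terminal or from PyCharm. If the CSV file is missing, the parameter list is empty and pytest skips the test instead of failing.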